Both Quanta Magazine and New Scientist reported on some renewed interest in an old idea. It was an approach to particle physics, proposed by theoretical physicist Geoffrey Chew in the 1960s, that ignored questions about which particles were most elementary and put a major portion of the weight of discovery on mathematics. Chew expected that information about the strong interaction could be derived from looking at what happens when particles of any sort collide. And he proposed S-matrix theory as a substitute for quantum field theory. S-matrix theory contained no notion of space and time. These were replaced by the abstract mathematical properties of the S-matrix, which had been developed by Werner Heisenberg in 1943 as a principle of particle interactions.

New research, with a similarly democratic approach to matter, is concerned with mathematically modeling phase transitions – those moments when matter undergoes a significant transformation. The hope is that what is learned about phase transitions could tell us quite a lot about the fundamental nature of all matter. As New Scientist author Gabriel Popkin tells us:

Whether it’s the collective properties of electrons that make a material magnetic or superconducting, or the complex interactions by which everyday matter acquires mass, a host of currently intractable problems might all follow the same mathematical rules. Cracking this code could help us on the way to everything from more efficient transport and electronics to a new, shinier, quantum theory of gravity.

Work in this direction goes back to 1944, when Norwegian physicist Lars Onsager solved the problem of modeling a material that loses its magnetism when heated above a certain temperature. Although his was a 2-dimensional model, it has nonetheless been used to simulate the flipping of various physical states, from the spread of an infectious disease to neuron signaling in the brain. The model is referred to as the Ising model, named for Ernst Ising, who first investigated the idea in his PhD thesis but without success.
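To make the idea of "flipping physical states" concrete, here is a minimal sketch of a 2D Ising model simulated with the Metropolis algorithm. This is a standard numerical technique, not Onsager's exact analytical solution, and every name in it (`energy_change`, `metropolis_sweep`, `simulate`) is chosen for illustration. It assumes a square lattice with periodic boundaries, a coupling strength of 1, and temperature measured in units where Boltzmann's constant is 1.

```python
import math
import random


def energy_change(spins, i, j):
    """Energy cost of flipping the spin at (i, j); coupling J = 1 is assumed."""
    n = len(spins)
    # Sum of the four nearest neighbours, wrapping around at the edges.
    neighbours = (
        spins[(i - 1) % n][j] + spins[(i + 1) % n][j]
        + spins[i][(j - 1) % n] + spins[i][(j + 1) % n]
    )
    return 2 * spins[i][j] * neighbours


def metropolis_sweep(spins, temperature, rng):
    """Attempt one spin flip per lattice site, on average."""
    n = len(spins)
    for _ in range(n * n):
        i, j = rng.randrange(n), rng.randrange(n)
        d_e = energy_change(spins, i, j)
        # Always accept flips that lower the energy; accept others
        # with the Boltzmann probability exp(-dE / T).
        if d_e <= 0 or rng.random() < math.exp(-d_e / temperature):
            spins[i][j] = -spins[i][j]


def magnetisation(spins):
    """Absolute average spin: near 1 when ordered, near 0 when disordered."""
    n = len(spins)
    return abs(sum(sum(row) for row in spins)) / (n * n)


def simulate(n, temperature, sweeps, seed=0):
    """Start from an all-up lattice and return the final magnetisation."""
    rng = random.Random(seed)
    spins = [[1] * n for _ in range(n)]
    for _ in range(sweeps):
        metropolis_sweep(spins, temperature, rng)
    return magnetisation(spins)


if __name__ == "__main__":
    # In these units Onsager's critical temperature is 2 / ln(1 + sqrt(2)), about 2.27.
    for t in (1.0, 2.0, 3.0, 5.0):
        print(t, round(simulate(16, t, 200), 3))
```

Run on a small lattice, the magnetisation should stay close to 1 well below the critical temperature and fall toward 0 well above it, which is the loss of magnetism described above. A 16 by 16 lattice only blurs the transition, so the numbers are indicative rather than precise.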

In the 1960s, Russian theorist Alexander Polyakov began studying how fundamental particle interactions might undergo phase transitions, motivated by the fact that the 2D Ising model and the equations that describe the behavior of elementary particles share certain symmetries. And so he worked backwards from the symmetries to the equations.

Popkin explains:

Polyakov’s approach was certainly a radical one. Rather than start out with a sense of what the equations describing the particle system should look like, Polyakov first described its overall symmetries and other properties required for his model to make mathematical sense. Then, he worked backwards to the equations. The more symmetries he could describe, the more he could constrain how the underlying equations should look.

Polyakov’s technique is now known as the bootstrap method, characterized by its ability to pull itself up by its own bootstraps and generate knowledge from only a few general properties. “You get something out of nothing,” says Komargodski. Polyakov and his colleagues soon managed to bootstrap their way to replicating Onsager’s achievement with the 2D Ising model – but try as they might, they still couldn’t crack the 3D version. “People just thought there was no hope,” says David Poland, a physicist at Yale University. Frustrated, Polyakov moved on to other things, and bootstrap research went dormant.

This is part of the old idea. Bootstrapping, as a strategy, is attributed to Geoffrey Chew, who argued in the 1960s that the laws of nature could be deduced entirely from the internal demand that they be self-consistent. In Quanta, Natalie Wolchover explains:

Chew’s approach, known as the bootstrap philosophy, the bootstrap method, or simply “the bootstrap,” came without an operating manual. The point was to apply whatever general principles and consistency conditions were at hand to infer what the properties of particles (and therefore all of nature) simply had to be. An early triumph in which Chew’s students used the bootstrap to predict the mass of the rho meson — a particle made of pions that are held together by exchanging rho mesons — won many converts.

The effort gained traction again in 2008, when physicist Slava Rychkov and colleagues at CERN decided to use these methods to build a physics theory that didn’t have a Higgs particle. This turned out not to be necessary (I suppose), but the work was nonetheless productive in the development of bootstrapping techniques.

The symmetries of physical systems at critical points are transformations that, when applied, leave the system unchanged. Particularly important are scaling symmetries, where zooming in or out doesn’t change what you see, and conformal symmetries, where the shapes of things are preserved under transformations. The key to Polyakov’s work was to realize that different materials, at critical points, have symmetries in common. These bootstrappers are exploring a mathematical theory space, and they seem to be finding that the set of all quantum field theories forms a unique mathematical structure.
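As a rough illustration of what a scaling symmetry means in formulas, consider how strongly a quantity at one point is correlated with the same quantity a distance away. The notation here is an assumption for illustration and is not taken from the articles: σ stands for a local observable such as the spin in the Ising model, Δ for its scaling dimension, and λ for the zoom factor.

```latex
% Scale invariance of a two-point correlation function at a critical point.
% \sigma is a local observable (for example the spin); \Delta is its scaling dimension.
\langle \sigma(x)\,\sigma(0) \rangle \;\propto\; \frac{1}{|x|^{2\Delta}}
\quad\Longrightarrow\quad
\langle \sigma(\lambda x)\,\sigma(0) \rangle
  \;=\; \lambda^{-2\Delta}\,\langle \sigma(x)\,\sigma(0) \rangle
```

Zooming by a factor λ changes the correlation only by an overall factor, so there is no preferred length scale. For the spin in the 2D Ising model the scaling dimension is Δ = 1/8. Pinning down numbers like Δ from symmetry and consistency alone is the kind of result the bootstrap aims for.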

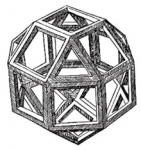

What’s most interesting about all of this is that these physicists are investigating the geometry of a “theory space,” where theories live, and where the features of theories can be examined. Nima Arkani-Hamed, professor of physics at the Institute for Advanced Study, has suggested that the space they are investigating could have a polyhedral structure with interesting theories living at the corners. It was also suggested that the polyhedron might encompass the amplituhedron, a geometric object discovered in 2013 that encodes, in its volume, the probabilities of different particle collision outcomes.

Wolchover wrote about the amplituhedron in 2013.

The revelation that particle interactions, the most basic events in nature, may be consequences of geometry significantly advances a decades-long effort to reformulate quantum field theory, the body of laws describing elementary particles and their interactions. Interactions that were previously calculated with mathematical formulas thousands of terms long can now be described by computing the volume of the corresponding jewel-like “amplituhedron,” which yields an equivalent one-term expression.

The decades-long effort is the one to which Chew also contributed. The discovery of the amplituhedron began when some mathematical tricks were employed to calculate the scattering amplitudes of known particle interactions. Theorists Stephen Parke and Tomasz Taylor found a one-term expression that could do the work of hundreds of Feynman diagrams, which would otherwise translate into thousands of mathematical terms. It took about 30 years for the patterns being identified in these simplified expressions to be recognized as the volume of a new mathematical object, now named the amplituhedron. Nima Arkani-Hamed and Jaroslav Trnka published results in 2014.
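For readers curious about what such a one-term expression looks like, the Parke–Taylor formula is usually written in spinor-helicity notation, which the articles quoted here do not use. In the sketch below, the angle bracket ⟨a b⟩ is a spinor product built from the momenta of gluons a and b. Gluons i and j carry negative helicity and the rest positive. Overall coupling constants and the momentum-conservation factor are left out.

```latex
% Tree-level, color-ordered amplitude for n gluons in which gluons i and j
% have negative helicity and all others positive (couplings and the
% momentum-conserving delta function are omitted).
A_n\bigl(1^+,\dots,i^-,\dots,j^-,\dots,n^+\bigr)
  \;\propto\;
  \frac{\langle i\,j\rangle^{4}}
       {\langle 1\,2\rangle\,\langle 2\,3\rangle\cdots\langle n\,1\rangle}
```

The same compact form holds for any number of gluons n, whereas the number of Feynman diagrams needed for the same calculation grows very rapidly with n.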

Again from Wolchover:

Beyond making calculations easier or possibly leading the way to quantum gravity, the discovery of the amplituhedron could cause an even more profound shift, Arkani-Hamed said. That is, giving up space and time as fundamental constituents of nature and figuring out how the Big Bang and cosmological evolution of the universe arose out of pure geometry.

Whatever the future of these ideas, there is something inspiring about watching the mind’s eye find clarifying geometric objects in a sea of algebraic difficulty. The relationship between mathematics and physics, or mathematics and material for that matter, is a consistently beautiful, captivating, and enigmatic puzzle.
