By Joselle, on May 25th, 2016

In 2011 John Horgan posted a piece on his blog, Cross Check (part of the Scientific American blog network), with the title Why Information can’t be the basis of reality. There Horgan observes that the “everything-is-information meme violates common sense.” As of last December (at least), he hadn’t changed his mind. He referred back to the information piece in a subsequent post that was, essentially, a critique of Giulio Tononi’s Integrated Information Theory of Consciousness, which had been the focus of a workshop Horgan attended at New York University last November. In that December post Horgan quotes himself making the following argument:

The concept of information makes no sense in the absence of something to be informed—that is, a conscious observer capable of choice, or free will (sorry, I can’t help it, free will is an obsession). If all the humans in the world vanished tomorrow, all the information would vanish, too. Lacking minds to surprise and change, books and televisions and computers would be as dumb as stumps and stones. This fact may seem crushingly obvious, but it seems to be overlooked by many information enthusiasts. The idea that mind is as fundamental as matter—which Wheeler’s “participatory universe” notion implies–also flies in the face of everyday experience. Matter can clearly exist without mind, but where do we see mind existing without matter? Shoot a man through the heart, and his mind vanishes while his matter persists.

What is being overlooked here, however, are the subtleties in a growing and constantly shifting perspective on information itself. More precisely, what is being overlooked is what information enthusiasts understand information to be and how it can be seen acting in the world around us. Information is no longer defined only through the lens of human-centered learning. But it is promising, as I see it, that information, as it is currently understood, includes human-centered learning and perception. The slow and steady movement toward a reappraisal of what we mean by information inevitably begins with Claude Shannon, who in 1948 published A Mathematical Theory of Communication in the Bell System Technical Journal. Shannon saw that transmitted messages could be encoded with just two bursts of voltage – an on burst and an off burst, or 0 and 1 – which immediately improved the integrity of transmissions. But, of even greater significance, this binary code made possible a mathematical framework that could measure the information in a message. This measure is known as Shannon’s entropy, because it mirrors the definition of entropy in statistical mechanics, which is a statistical measure of thermodynamic entropy. Alok Jha does a nice job of describing the significance of Shannon’s work in a piece he wrote for The Guardian.
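Shannon’s measure fits in a few lines. As a simplifying assumption, the sketch below treats the relative frequencies of the symbols in a single message as the source probabilities (Shannon defined entropy for a source, not for one message) and computes H = Σ p·log2(1/p), in bits per symbol. A message that never varies carries no surprise; a fair alternation of 0s and 1s carries one bit per symbol:

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Entropy in bits per symbol, using the message's own symbol
    frequencies as the probabilities (a simplifying assumption)."""
    counts = Counter(message)
    n = len(message)
    # H = sum over symbols of p * log2(1/p)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


print(shannon_entropy("0000000000"))  # → 0.0 (no surprise at all)
print(shannon_entropy("0101010101"))  # → 1.0 (one bit per symbol)
```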

In a Physics Today article physicists Eric Lutz and Sergio Ciliberto begin a discussion of a quirk in the second law of thermodynamics (known as Maxwell’s demon) in this way:

Almost 25 years ago, Rolf Landauer argued in the pages of this magazine that information is physical (see PHYSICS TODAY, May 1991, page 23). It is stored in physical systems such as books and memory sticks, transmitted by physical means – for instance, via electrical or optical signals – and processed in physical devices. Therefore, he concluded, it must obey the laws of physics, in particular the laws of thermodynamics.

But Maxwell’s demon messes with the second law of thermodynamics. It’s the product of a thought experiment involving a hypothetical, intelligent creature imagined by physicist James Clerk Maxwell in 1867. The creature raises the possibility that the second law could be violated because of what it ‘knows.’ Lisa Zyga describes Maxwell’s thought experiment nicely in a phys.org piece that reports on related findings:

In the original thought experiment, a demon stands between two boxes of gas particles. At first, the average energy (or speed) of gas molecules in each box is the same. But the demon can open a tiny door in the wall between the boxes, measure the energy of each gas particle that floats toward the door, and only allow high-energy particles to pass through one way and low-energy particles to pass through the other way. Over time, one box gains a higher average energy than the other, which creates a pressure difference. The resulting pushing force can then be used to do work. It appears as if the demon has extracted work from the system, even though the system was initially in equilibrium at a single temperature, in violation of the second law of thermodynamics.
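The sorting in Zyga’s description can be imitated with a toy simulation. This is a sketch under assumptions not taken from the source: particle energies are drawn from an exponential distribution with mean 1.0, and an idealized demon sees every particle and sends it to the "hot" or "cold" side according to an energy threshold. Deliberately left out is any cost of measuring or remembering, which is exactly the bookkeeping the rest of this story is about:

```python
import random
from statistics import mean


def demon_sort(n_per_box=1000, threshold=1.0, seed=0):
    """Toy Maxwell's demon. Assumptions: exponential energies with mean 1.0,
    and a demon that measures and sorts every particle at no cost."""
    rng = random.Random(seed)
    # Both boxes start in equilibrium: the same energy distribution.
    box_a = [rng.expovariate(1.0) for _ in range(n_per_box)]
    box_b = [rng.expovariate(1.0) for _ in range(n_per_box)]
    everyone = box_a + box_b
    # Slow particles end up on one side, fast ones on the other.
    cold = [e for e in everyone if e <= threshold]
    hot = [e for e in everyone if e > threshold]
    return box_a, box_b, cold, hot


box_a, box_b, cold, hot = demon_sort()
print(f"before: {mean(box_a):.2f} vs {mean(box_b):.2f}")
print(f"after:  {mean(cold):.2f} vs {mean(hot):.2f}")
```

Under these assumptions the hot side’s mean energy should land near 2.0 and the cold side’s near 0.42, even though no particle was pushed and total energy is unchanged. The temperature difference comes entirely from what the demon measured.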

I’m guessing that Horgan would find this consideration foolish. But Maxwell didn’t.

And I would like to suggest that this is because a physical law is not expected to hold true only from our perspective. Rather, it should be impossible to violate a physical law. And it has now become possible to test Maxwell’s concern in the lab. Recent experiments shed light not only on the law but also on how one can understand the nature of information. While all of the articles and papers referenced in this post are concerned with Maxwell’s demon, what they inevitably address is a more precise and deeper understanding of the nature and physicality of what we call information.

On 30 December 2015, Physical Review Letters published a paper that presents an experimental realization of “an autonomous Maxwell’s demon.” Theoretical physicist Sebastian Deffner, who wrote a companion piece for that paper, gives a nice history of the problem.

Maxwell’s demon was an instant source of fascination and led to many important results, including the development of a thermodynamic theory of information. But a particularly important insight came in the 1960s from the IBM researcher Rolf Landauer. He realized that the extra work that can be extracted from the demon’s action has a cost that has to be “paid” outside the gas-plus-demon system. Specifically, if the demon’s memory is finite, it will eventually overflow because of the accumulated information that has to be collected about each particle’s speed. At this point, the demon’s memory has to be erased for the demon to continue operating—an action that requires work. This work is exactly equal to the work that can be extracted by the demon’s sorting of hot and cold particles. Properly accounting for this work recovers the validity of the second law. In essence, Landauer’s principle means that “information is physical.” But it doesn’t remove all metaphysical entities nor does it provide a recipe for building a demon. For instance, it is fair to ask: Who or what erases the demon’s memory? Do we need to consider an über-demon acting on the demon?
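Landauer’s bound can be put in numbers. Erasing one bit of memory at temperature T requires at least k_B·T·ln 2 of work, where k_B is Boltzmann’s constant. The sketch below assumes room temperature (300 K) and an ideal eraser; real hardware typically dissipates far more per erased bit. In the idealized case this is also the most work a Szilard-type demon can extract per bit it measures, which is why the books balance:

```python
import math

BOLTZMANN_K = 1.380649e-23  # J/K (exact SI value)


def landauer_limit(temperature_kelvin: float, bits: float = 1.0) -> float:
    """Minimum work in joules to erase `bits` bits at temperature T:
    W = bits * k_B * T * ln 2 (ideal, quasi-static erasure assumed)."""
    return bits * BOLTZMANN_K * temperature_kelvin * math.log(2)


print(landauer_limit(300.0))       # about 2.87e-21 J for one bit
print(landauer_limit(300.0, 8e9))  # about 2.3e-11 J for a gigabyte
```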

In 1929, physicist Leo Szilard proposed that it was possible to replace the human-like intelligence Maxwell had described with autonomous, possibly mechanical, systems that would act like the demon but fully obey the laws of physics. A team of physicists in Finland led by Jukka Pekola built such a system last year.

According to Deffner,

The researchers showed that the demon’s actions make the system’s temperature drop and the demon’s temperature rise, in agreement with the predictions of a simple theoretical model. The temperature change is determined by the so-called mutual information between the system and demon. This quantity characterizes the degree of correlation between the system and demon; or, in simple terms, how much the demon “knows” about the system.

We now have an experimental system that fully agrees with our simple intuition—namely that information can be used to extract more work than seemingly permitted by the original formulations of the second law. This doesn’t mean that the second law is breakable, but rather that physicists need to find a way to carefully formulate it to describe specific situations. In the case of Maxwell’s demon, for example, some of the entropy production has to be identified with the information gained by the demon. The Aalto University team’s experiment also opens a new avenue of research by explicitly showing that autonomous demons can exist and are not just theoretical exercises.
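The “mutual information” in Deffner’s description can be made concrete with a toy model, assumed here rather than taken from the Aalto experiment: a single particle is equally likely to be in the left or right half of a box, and the demon reads its position with error probability ε. The mutual information is then I = 1 - H2(ε) bits, where H2 is the binary entropy. Assuming the generalized second law for feedback control developed by Sagawa and Ueda, the work one cycle can yield is bounded by k_B·T·ln 2·I, so a perfectly informed demon gets the full Landauer amount and a demon that is guessing gets nothing:

```python
import math

BOLTZMANN_K = 1.380649e-23  # J/K


def binary_entropy(p: float) -> float:
    """H2(p) in bits, with H2(0) = H2(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def demon_mutual_information(error_rate: float) -> float:
    """I(X;Y) in bits between the particle's side X (50/50) and the demon's
    reading Y, which is wrong with probability `error_rate`."""
    return 1.0 - binary_entropy(error_rate)


def max_work_per_cycle(error_rate: float, temperature_kelvin: float) -> float:
    """Assumed feedback bound for a Szilard-type cycle: W <= k_B T ln2 * I(bits)."""
    return (BOLTZMANN_K * temperature_kelvin * math.log(2)
            * demon_mutual_information(error_rate))


for eps in (0.0, 0.1, 0.5):
    print(eps, demon_mutual_information(eps), max_work_per_cycle(eps, 300.0))
```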

Earlier results (April 2015) were published by Takahiro Sagawa and colleagues, who reported a realization of what they called an information heat engine – their version of the demon.

Due to the advancements in the theories of statistical physics and information science, it is now understood that the demon is indeed consistent with the second law if we take into account the role of information in thermodynamics. Moreover, it has been recognized that the demon plays the key role to construct a unified theory of information and thermodynamics. From the modern point of view, the demon is regarded as a feedback controller that can use the obtained information as a resource of the work or the free energy. Such an engine controlled by the demon can be called an information heat engine.

From Lisa Zyga again:

Now in a new paper, physicists have reported what they believe is the first photonic implementation of Maxwell’s demon, by showing that measurements made on two light beams can be used to create an energy imbalance between the beams, from which work can be extracted. One of the interesting things about this experiment is that the extracted work can then be used to charge a battery, providing direct evidence of the “demon’s” activity.

Physicist Mihai D. Vidrighin and colleagues carried out the experiment at the University of Oxford. The results were published in a recent issue of Physical Review Letters.

All of these efforts illustrate the details needed to demonstrate that information can act on a system and that it needs to be understood in physical terms. This refreshed view of information inevitably bears on our view of ourselves.

These ideas and more were brought to bear in a lecture given by Max Tegmark in November 2013 (before some of the results cited here) titled Thermodynamics, Information and Consciousness in a Quantum Multiverse. The talk was very encouraging. One of his first slides said:

I think that consciousness is the way information feels when being processed in certain complex ways.

“What’s the fuss about entropy?” he asks. And the answer is that entropy is one of the things crucial to a useful interpretation of quantum mechanical facts. This lecture is fairly broad in scope. From Shannon’s entropy to decoherence, Tegmark explores meaning mathematically. ‘Observation’ is redefined. An observer can be a human observer or a particle of light (like the demons designed in the experiments thus far described). Clearly Maxwell had some intuition about the significance of information and observation when he first described his demon.

Tegmark’s lecture makes the nuances of meaning in physics and mathematics clear. And this is what is overlooked in Horgan’s criticism of the information-is-everything meme. And Tegmark is clearly invested in understanding all of nature, as he says – the stuff we’re looking at, the stuff we’re not looking at, and our own mind. The role that mathematics is playing in the definition of information is certainly mediating this unity. And Tegmark rightly argues that only if we rid ourselves of the duality that separates the mind from everything else can we find a deeper understanding of quantum mechanics, the emergence of the classical world, or even what measurement actually is.

By Joselle, on April 25th, 2016

I just listened to a talk given by Virginia Chaitin that can be found on academia.edu. The title of the talk is A philosophical perspective on a metatheory of biological evolution. In it she outlines Gregory Chaitin’s work on metabiology, which has been the subject of some of my previous posts – here, here, and here. But, since the emphasis of the talk is on the philosophical implications of the theory, I became particularly aware of what metabiology may be saying about mathematics. It is, after all, the mathematics that effects the paradigm shifts that bring about alternative philosophies.

Metabiology develops as a way to answer the question of whether or not one can prove mathematically that evolution, through random mutations and natural selection, is capable of producing the diversity of life forms that exist today. This, in itself, shines a light on mathematics. Proof is a mathematical idea. And in the Preface to Proving Darwin, Gregory Chaitin articulates the bigger idea:

The purpose of this book is to lay bare the deep inner mathematical structure of biology, to show life’s hidden mathematical core. (emphasis my own)

And so it would seem clear that metabiology is as much about mathematics as it is about biology, perhaps more so.

Almost every discussion of metabiology addresses some of its philosophical implications, but there are points made in this talk that speak more directly to mathematics’ role in metabiology’s paradigm shifts. For example, Chaitin (Virginia) begins by stressing that metabiology makes different use of mathematics. By this she means that mathematics is not being used to model evolution, but to explore it, crack it open. Results in mathematics suggest a view that removes some of the habitual thoughts associated with what we think we see. She explains that this is possible because metabiology takes advantage of aspects of mathematics that are not widely known or taught – like its logical irreducibility and quasi-experimental nature. She also explains that exploratory strategies can combine or interweave computable and uncomputable steps. And so mathematics here is not being used ‘instrumentally,’ but as a way to express the creativity of evolution by way of its own creative nature. These strategies are some of the consequences of Gödel’s and Turing’s insights.

The fact that metabiology relies so heavily on a post-Gödel and post-Turing understanding of mathematics and computability puts a spotlight on the depth and significance of these insights, and perhaps points to some yet-to-be-discovered implications of incompleteness. I continue to find it particularly interesting that while Gödel’s incompleteness theorems and Turing’s identification of the halting problem both look like they point to limitations within their respective disciplines, in metabiology they each clearly inspire new biological paradigms that could very well lead to new science. Metabiology affirms that our ideas concerning incompleteness and uncomputability provide insights into nature as well as into mathematics and computation. And so these important results from Gödel and Turing describe not the limitations of mathematics or computers, but the limitations of a perspective, the limitations of a mechanistic point of view. Proofs, Chaitin tells us, are used in metabiology to generate and express novelty. And this is what nature does.

The talk lays out the necessary correspondences between biology and metabiology and, for the sake of thoroughness, I’ll repeat them here:

- Biology deals in natural software (DNA and RNA) while metabiological software is a computer program.

- In biology, organisms result from processes involving DNA, RNA and the environment while in metabiology the organism is the software itself.

- In biology evolution increases the sophistication of biological lifeforms, while in metabiology evolution is the increase in the information content of algorithmic life forms.

- The challenge to an organism in nature is to survive and adapt, while the challenge in metabiology is to solve a mathematical problem that requires creative uncomputable steps.

- In metabiology evolution is defined by an increase in the information content of an algorithmic life form, and fitness is understood as the growth of conceptual complexity. These are mathematical ideas, and together they create a lens through which we can view evolution.

In a recent paper on conceptual complexity and algorithmic information theory, Gregory Chaitin defines conceptual complexity in this way:

In this essay we define the conceptual complexity of an object X to be the size in bits of the most compact program for calculating X, presupposing that we have picked as our complexity standard a particular fixed, maximally compact, concise universal programming language U. This is technically known as the algorithmic information content of the object X, denoted H_U(X) or simply H(X) since U is assumed fixed. In medieval terms, H(X) is the minimum number of yes/no decisions that God would have to make to create X.
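H(X) is uncomputable, so no program can report it exactly. A common computable stand-in, assumed here and not part of Chaitin’s definition, is the length of X after compression with an ordinary compressor: up to the constant size of the decompressor, it gives an upper bound on how short a program for X can be. The sketch uses Python’s zlib, which is of course not Chaitin’s universal language U:

```python
import random
import zlib


def compressed_size_bits(data: bytes) -> int:
    """Bits in a zlib (level 9) compression of `data`: a crude upper-bound
    proxy for algorithmic information content, never the exact H(X)."""
    return 8 * len(zlib.compress(data, level=9))


patterned = b"01" * 5000                    # 10,000 bytes following an obvious rule
noisy = random.Random(0).randbytes(10_000)  # 10,000 pseudorandom bytes

print(compressed_size_bits(patterned))  # a tiny fraction of 80,000 bits
print(compressed_size_bits(noisy))      # roughly 80,000 bits: no savings found
```

There is an irony here worth noting: the “noisy” bytes were produced by a short program with a fixed seed, so their true algorithmic information content is small. zlib simply cannot find that program, which is a small illustration of why H(X) can be bounded from above but not computed.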

Biological creativity, here, becomes associated with mathematical creativity and is understood as the generation of novelty, which is further understood as the generation of new information content. Virginia Chaitin also tells us that metabiology proposes a hybrid theory that relies on computability (something that can be understood mechanically) and uncomputability (something that cannot).

It seems to me that the application of incompleteness, uncomputability, and undecidability, in any context, serves to prune the mechanistic habits that have grown over the centuries in the sciences, as well as the habits of logic that are thought to lead to true things.

The need to address these issues can be seen even in economics, as explored in a 2008 paper by K. Vela Velupillai that I happened upon, presented at the International Conference on Unconventional Computation. The paper’s abstract says this:

Economic theory, game theory and mathematical statistics have all increasingly become algorithmic sciences. Computable Economics, Algorithmic Game Theory [Noam Nisan, Tim Roughgarden, Éva Tardos, Vijay V. Vazirani (Eds.), Algorithmic Game Theory, Cambridge University Press, Cambridge, 2007] and Algorithmic Statistics [Péter Gács, John T. Tromp, Paul M.B. Vitányi, Algorithmic statistics, IEEE Transactions on Information Theory 47 (6) (2001) 2443–2463] are frontier research subjects. All of them, each in its own way, are underpinned by (classical) recursion theory – and its applied branches, say computational complexity theory or algorithmic information theory – and, occasionally, proof theory. These research paradigms have posed new mathematical and metamathematical questions and, inadvertently, undermined the traditional mathematical foundations of economic theory. A concise, but partial, pathway into these new frontiers is the subject matter of this paper.

Mathematical physicist John Baez writes about computability, uncomputability, logic, probability, and truth in a series of posts found here. They’re worth a look.

By Joselle, on March 30th, 2016

A recent issue of New Scientist featured an article by Kate Douglas with the provocative title Nature’s brain: A radical new view of evolution. The limits of our current understanding of evolution, and the alternative view discussed in the article, are summarized in this excerpt:

Any process built purely on random changes has a lot of potential changes to try. So how does natural selection come up with such good solutions to the problem of survival so quickly, given population sizes and the number of generations available?…It seems that, added together, evolution’s simple processes form an intricate learning machine that draws lessons from past successes to improve future performance.

Evolution, as it has been understood, relies on the ideas of variation, selection, and inheritance. But learning uses the past to anticipate the future. Random mutations, on the other hand, are selected by current circumstances. Yet the proposal is that natural selection somehow reuses successful variants from the past. This idea has been given the room to develop, in large part, with the increasing use and broadened development of iterative learning algorithms.

Leslie Valiant, a computational theorist at Harvard University, approached the possibility in his 2013 book, Probably Approximately Correct. There he equated evolution’s action with the learning algorithm known as Bayesian updating.
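One way to make the evolution-as-learning analogy concrete is a well-known formal correspondence, assumed here and not necessarily Valiant’s own formulation: one generation of selection in the discrete-time replicator equation has exactly the form of one step of Bayes’ rule, with variant frequencies playing the role of prior beliefs and fitness playing the role of likelihood. The numbers below are hypothetical:

```python
def normalize(weights):
    """Rescale non-negative weights so they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


def bayes_update(prior, likelihood):
    """Bayes' rule: posterior is proportional to prior times likelihood."""
    return normalize([p * l for p, l in zip(prior, likelihood)])


def selection_step(frequencies, fitness):
    """Discrete-time replicator step: each variant's share is scaled by its
    fitness relative to the population average."""
    return normalize([f * w for f, w in zip(frequencies, fitness)])


# Hypothetical population: three variants with starting shares and fitnesses.
frequencies = [0.5, 0.3, 0.2]
fitness = [1.0, 1.5, 0.5]

for generation in range(1, 4):
    frequencies = selection_step(frequencies, fitness)
    print(generation, [round(f, 3) for f in frequencies])
```

The two functions are the same computation under different names, which is the point: read as inference, the population shifts its “belief” toward the variants whose past performance best predicted survival.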

Richard Watson of the University of Southampton, UK, has added a new observation, and this is the subject of the New Scientist article: genes do not work independently; they work in concert. They create networks of connections. And a network’s organization is a product of past evolution, since natural selection will reward gene associations that increase fitness. What Watson realized is that the making of connections among genes in evolution, forged in order to produce a fit phenotype, parallels the making of neural networks, or networks of associations built in the human brain for problem solving. Watson and his colleagues have gone as far as creating a learning model demonstrating that a gene network could make use of generalization when grappling with a problem under the pressure of natural selection.
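Watson’s analogy can be illustrated with the simplest model of associative learning, a Hebbian (Hopfield-style) network. This is a generic sketch under stated assumptions, not Watson’s published model: each “gene” is on (+1) or off (-1), connections are strengthened between genes that were co-expressed in past fit phenotypes, and “development” repeatedly sets each gene to the sign of its weighted input. Started from a perturbed state, the network settles back into a previously rewarded phenotype:

```python
def hebbian_weights(patterns):
    """Hebbian rule: w[i][j] sums p[i] * p[j] over stored patterns (no self-links)."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def develop(w, state, steps=5):
    """Synchronous updates: each gene takes the sign of its weighted input (ties -> +1)."""
    s = list(state)
    for _ in range(steps):
        s = [1 if sum(w[i][j] * s[j] for j in range(len(s))) >= 0 else -1
             for i in range(len(s))]
    return s


# Two hypothetical "past successful" phenotypes (orthogonal +1/-1 patterns).
fit_a = [1, 1, 1, 1, -1, -1, -1, -1]
fit_b = [1, -1, 1, -1, 1, -1, 1, -1]
w = hebbian_weights([fit_a, fit_b])

perturbed = [-1] + fit_a[1:]           # fit_a with its first gene flipped
print(develop(w, perturbed) == fit_a)  # → True
```

This only shows recall of stored associations; the generalization Watson’s group describes goes further than a toy of this size can.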

I can’t help but think of Gregory Chaitin’s random walk through software space, his metabiology, where life is considered evolving software (Proving Darwin). Chiara Marletto’s application of David Deutsch’s constructor theory to biology also comes to mind. Chaitin’s idea is characterized by an algorithmic evolution, Marletto’s by digitally coded information that can act as a constructor, which has what she calls causal power and resiliency.

What I find striking about all of these ideas is the jumping around that mathematics seems to be doing – it’s here, there and everywhere. And, it should be pointed out that these efforts are not just the application of mathematics to a difficult problem. Rather, mathematics is providing a new conceptualization of the problem. It’s reframing the questions as well as the answers. For Chaitin, mathematical creativity is equated with biological creativity. For Deutsch, information is the only independent substrate of everything and, for Marletto, this information-based theory brings biology into fundamental physics.

A NY Times review of Valiant’s book, by Edward Frenkel, says this:

The importance of these algorithms in the modern world is common knowledge, of course. But in his insightful new book “Probably Approximately Correct,” the Harvard computer scientist Leslie Valiant goes much further: computation, he says, is and has always been “the dominating force on earth within all its life forms.” Nature speaks in algorithms.

…This is an ambitious proposal, sure to ignite controversy. But what I find so appealing about this discussion, and the book in general, is that Dr. Valiant fearlessly goes to the heart of the “BIG” questions.

That’s what’s going on here. Mathematics is providing the way to precisely explore conceptual analogies to get to the heart of big questions.

I’ll wrap this up with an excerpt from an article by Arturo Carsetti that appeared in the November 2014 issue of Cognitive Processing with the title Life, cognition and metabiology.

Chaitin (2013) is perfectly right to bring the phenomenon of evolution in its natural place which is a place characterized in a mathematical sense: Nature “speaks” by means of mathematical forms. Life is born from a compromise between creativity and meaning, on the one hand, and, on the other hand, is carried out along the ridges of a specific canalization process that develops in accordance with computational schemes…Hence, the emergence of those particular forms…that are instantiated, for example, by the Fibonacci numbers, by the fractal-like structures etc. that are ubiquitous in Nature. As observers we see these forms but they are at the same time inside us, they pave of themselves our very organs of cognition. (emphasis added)

By Joselle, on January 31st, 2016

A recent discovery in the history of science and mathematics has prompted a number of articles, links to which are provided at the end of this text. Astrophysicist and science historian Mathieu Ossendrijver, of Humboldt University in Berlin, made the observation that ancient Babylonian astronomers calculated Jupiter’s position from the area under a time-velocity graph. He very recently published his findings in the journal Science. Philip Ball reported on the findings in Nature.

A reanalysis of markings on Babylonian tablets has revealed that astronomers working between the fourth and first centuries BC used geometry to calculate the motions of Jupiter — a conceptual leap that historians thought had not occurred until fourteenth-century Europe.

Also from Ball,

Hermann Hunger, a specialist on Babylonian astronomy at the University of Vienna, says that the work marks a new discovery. However, he and Ossendrijver both point out that Babylonian mathematicians were well accustomed to geometry, so it is not entirely surprising that astronomers might have grasped the same points. “These findings do not so much show a higher degree of sophistication in geometric thinking, but rather a remarkable ability to apply traditional Babylonian geometric thinking to a new problem”, Hunger says. (emphasis added).

This is learning or cognition at every level – applying an established thought or experience to a new problem.

An NPR report on the discovery takes note of the fact that Jupiter was associated with the Babylonian god Marduk. NPR’s Nell Greenfieldboyce comments:

Of course, these priests wanted to track Jupiter to understand the will of their god Marduk in order to do things like predict future grain harvests. But still, they had the insight to see that the same math used for working with mundane stuff like land could be applied to the motions of celestial objects.

And NYU’s Alexander Jones replies:

They’re, in a way, like modern scientists. In a way, they’re very different. But they’re still coming up with very, you know – things that we can recognize as being like what we value as mathematics and science.

I think Greenfieldboyce’s “but still…” and Jones’ follow-up comment betray an unnecessary dismissal of this non-scientific motivation. Discoveries like this one challenge a number of ideas that represent the consensus of opinion. These include the standard accounts of the history of science and mathematics, how we may or may not understand conceptual development in the individual as well as in various cultures, and the cultural overlaps that exist in science, mathematics, art, and religion. In any case, in the years that I’ve been teaching mathematics, I’ve tried to reassure my students that there’s a reason learning calculus can be so difficult. I suggest to them that the development of calculus relies on a cognitive shift, a quantification of change and movement, or of time and space. And the attention given to this Babylonian accomplishment highlights that fact. The accomplishment is stated most clearly at the end of Ossendrijver’s paper:

Ancient Greek astronomers such as Aristarchus of Samos, Hipparchus, and Claudius Ptolemy also used geometrical methods, while arithmetical methods are attested in the Antikythera mechanism and in Greco-Roman astronomical papyri from Egypt. However, the Babylonian trapezoid procedures are geometrical in a different sense than the methods of the mentioned Greek astronomers, since the geometrical figures describe configurations not in physical space but in an abstract mathematical space defined by time and velocity (daily displacement). (emphasis added)

The distinction being made here is between imagining the idealized figures of geometry against the spatial arrangement of observed celestial objects and imagining those same idealized figures as a description of the relations within a purely numeric set of measurements. It is a major difference, one that opens paths to modern mathematics.

Ossendrijver also describes, a bit more specifically, the 14th-century European scholars whose work, until now, was seen as the first use of these techniques:

The “Oxford calculators” of the 14th century CE, who were centered at Merton College, Oxford, are credited with formulating the “Mertonian mean speed theorem” for the distance traveled by a uniformly accelerating body, corresponding to the modern formula s = t•(v0 + v1)/2, where v0 and v1 are the initial and final velocities. In the same century Nicole Oresme, in Paris, devised graphical methods that enabled him to prove this relation by computing it as the area of a trapezoid of width t and heights v0 and v1
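The mean speed theorem can be checked numerically. For velocity changing uniformly from v0 to v1 over a time t, the trapezoid’s area t·(v0 + v1)/2 should match the displacement built up from many short intervals. The sketch uses hypothetical values (not the Babylonian or Mertonian figures) and a midpoint-rule sum; because the velocity graph is a straight line, the midpoint rule is exact, so the two agree up to floating-point rounding:

```python
def trapezoid_distance(t, v0, v1):
    """Area of a trapezoid of width t and heights v0, v1: s = t * (v0 + v1) / 2."""
    return t * (v0 + v1) / 2


def stepwise_distance(t, v0, v1, steps=100_000):
    """Sum displacement over many short intervals, using the velocity at the
    midpoint of each interval, for velocity changing uniformly from v0 to v1."""
    dt = t / steps
    total = 0.0
    for k in range(steps):
        v = v0 + (v1 - v0) * (k + 0.5) / steps
        total += v * dt
    return total


# Hypothetical example: velocity falls uniformly from 0.20 to 0.15 units/day over 60 days.
print(trapezoid_distance(60, 0.20, 0.15))  # about 10.5
print(stepwise_distance(60, 0.20, 0.15))   # the same, up to rounding
```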

Mark Thakkar wrote an article on these calculators for a 2007 issue of Oxford Today with emphasis on the imaginative nature of their analyses.

These scholars busied themselves with quantitative analyses of qualities such as heat, colour, density and light. But their experiments were those of the imagination; practical experiments would have been of little help in any case without suitable measuring instruments. Indeed, some of the calculators’ works, although ostensibly dealing with the natural world, may best be seen as advanced exercises in logic.

Thakkar reminds the reader that these men were philosophers and theologians, many of whom went on “to enjoy high-profile careers in politics or the Church.”

He also says this:

…with hindsight we can see that the calculators made an important advance by treating qualities such as heat and force as quantifiable at all, even if only theoretically. Although the problems they set themselves stemmed from imaginary situations rather than actual experiments, they nonetheless ‘introduced mathematics into scholastic philosophy’, as Leibniz put it. This influential move facilitated the full-scale application of mathematics to the real world that characterized the Scientific revolution and culminated triumphantly in Newton’s laws of motion.

I find it worth noting that reports of the ancient Babylonian accomplishment, as well as accounts of thinkers once credited with being the originators of these novel conceptualizations, necessarily include the theological considerations of their cultures.

Links:

Babylonian Astronomers Used Geometry to Track Jupiter

Full paper

NYTimes article

NPR

By Joselle, on December 31st, 2015

Quanta magazine has a piece on a recent conference in Munich where scientists and philosophers discussed the history and future of scientific inquiry. The meeting seems to have been motivated mostly by two things. The first is the diminishing prospects for physics experiments – energy levels that can’t be reached by accelerators and the limits of our cosmic horizon. The second is the debate over the value of untestable theories like string theory. The speakers and program can be found here.

But the underlying issues are, unmistakably, very old epistemological questions about the nature of truth and the acquisition of knowledge. And these questions highlight how impossible it is to disentangle mathematics from science.

Natalie Wolchover writes:

The crisis, as Ellis and Silk tell it, is the wildly speculative nature of modern physics theories, which they say reflects a dangerous departure from the scientific method. Many of today’s theorists — chief among them the proponents of string theory and the multiverse hypothesis — appear convinced of their ideas on the grounds that they are beautiful or logically compelling, despite the impossibility of testing them. Ellis and Silk accused these theorists of “moving the goalposts” of science and blurring the line between physics and pseudoscience. “The imprimatur of science should be awarded only to a theory that is testable,” Ellis and Silk wrote, thereby disqualifying most of the leading theories of the past 40 years. “Only then can we defend science from attack.”

Unfortunately, defending science from attack has become more urgent, and that concern often shapes the debate even when it is not acknowledged.

Reference was made to Karl Popper, who in the 1930s used falsifiability as the criterion for deciding whether or not a theory was scientific. But the perspective reflected in Bayesian statistics has become an alternative.

Bayesianism, a modern framework based on the 18th-century probability theory of the English statistician and minister Thomas Bayes. Bayesianism allows for the fact that modern scientific theories typically make claims far beyond what can be directly observed — no one has ever seen an atom — and so today’s theories often resist a falsified-unfalsified dichotomy. Instead, trust in a theory often falls somewhere along a continuum, sliding up or down between 0 and 100 percent as new information becomes available. “The Bayesian framework is much more flexible” than Popper’s theory, said Stephan Hartmann, a Bayesian philosopher at LMU. “It also connects nicely to the psychology of reasoning.”
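To make the picture of trust “sliding up or down” concrete, here is a minimal sketch of Bayes’ rule applied repeatedly as new evidence arrives. The likelihood values are invented purely for illustration, and `bayes_update` is a hypothetical name; nothing here comes from the Munich discussions.

```python
def bayes_update(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Return P(H | E) from P(H), P(E | H) and P(E | not H) using Bayes' rule."""
    evidence = p_e_given_h * prior + p_e_given_not_h * (1.0 - prior)
    return p_e_given_h * prior / evidence

# Hypothetical observations, each given as (P(E | H), P(E | not H)).
# The first two favor the hypothesis; the third counts against it.
observations = [(0.8, 0.4), (0.7, 0.5), (0.2, 0.6)]

credence = 0.5  # start undecided about the hypothesis H
history = [credence]
for p_h, p_not_h in observations:
    credence = bayes_update(credence, p_h, p_not_h)
    history.append(credence)

for step, value in enumerate(history):
    print(f"after {step} observations: {value:.0%}")
```

Credence never reaches 0 or 100 percent here; it rises with the first two observations and falls with the third, which is the continuum the passage contrasts with a falsified/unfalsified verdict.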

When Wolchover claimed that rationalism guided Einstein toward his theory of relativity, I started thinking beyond the controversy over the usefulness of string theory.

“I hold it true that pure thought can grasp reality, as the ancients dreamed,” Einstein said in 1933, years after his theory had been confirmed by observations of starlight bending around the sun.

This reference to ‘the ancients’ brought me back to my recent preoccupation with Platonism. The idea that pure thought can grasp reality is a provocative one, full of hidden implications about the relationship between thought and reality that have not been explored. It suggests that thought itself has some perceiving function, some way to see. It reminds me again of Leibniz’s philosophical dream where he found himself in a cavern with “little holes and almost imperceptible cracks” through which “a trace of daylight entered.” But the light was so weak, it “required careful attention to notice it.” His account of the action in the cavern (translated by Donald Rutherford) includes this:

…I began often to look above me and finally recognized the small light which demanded so much attention. It seemed to me to grow stronger the more I gazed steadily at it. My eyes were saturated with its rays, and when, immediately after, I relied on it to see where I was going, I could discern what was around me and what would suffice to secure me from dangers. A venerable old man who had wandered for a long time in the cave and who had had thoughts very similar to mine told me that this light was what is called “intelligence” or “reason” in us. I often changed position in order to test the different holes in the vault that furnished this small light, and when I was located in a spot where several beams could be seen at once from their true point of view, I found a collection of rays which greatly enlightened me. This technique was of great help to me and left me more capable of acting in the darkness.

It reminds me also of Plato’s simile of the sun, where Plato observes that sight is bonded to something else, namely light, or the sun itself.

Then the sun is not sight, but the author of sight who is recognized by sight.

And the soul is like the eye: when resting upon that on which truth and being shine, the soul perceives and understands, and is radiant with intelligence…

Although the Munich conference may be tied to the funding prospects for untestable theories, or to the need to distinguish scientific theories from things like intelligent design, there is no doubt that we are still asking some very old, multifaceted questions about the relationship between thought and reality. And mathematics is still at the center of the mystery.

By Joselle, on December 17th, 2015 I’ve been reading Rebecca Goldstein’s Incompleteness: The Proof and Paradox of Kurt Gödel which, together with my finding David Mumford’s Why I am a Platonist, has kept me a bit more preoccupied, of late, with Platonism. This is not an entirely new preoccupation. I remember one of my early philosophy teachers periodically blurting out, “See, Plato was right!” And I hate to admit that I often wondered, “About what?” But Plato’s idea has become a more pressing issue for me now. It inevitably touches on epistemology, on questions about the nature of the mind, and on the nature of our physical reality. Gödel’s commitment to a Platonic view is particularly striking to me because of how determined he appears to have been about it. Plato, incompleteness, objectivity, they all came to mind again when I saw a recent article in Nature by Davide Castelvecchi on a new connection between Gödel’s incompleteness theorems and unsolvable calculations in quantum physics.

Gödel intended for his proof of mathematics’ incompleteness to serve the notion of objectivity, but it was an objectivity diametrically opposed to the objectivity of the positivists, whose philosophy was gaining considerable momentum at the time. Goldstein quotes from a letter Gödel wrote in 1971 criticizing the direction that positivist thought took.

Some reductionism is correct, [but one should] reduce to (other) concepts and truths, not to sense perceptions….Platonic ideas are what things are to be reduced to.

The objectivity preserved when we restrict a discussion to sense perceptions rests primarily on the fact that we believe that we are seeing things ‘out there,’ that we are seeing objects that we can identify. This in itself is often challenged by the observation that what we see ‘out there’ is completely determined by the body’s cognitive actions. Quantum physics, however, has challenged our claims to objectivity for other reasons, like the wave/particle behavior of light and our inability to measure a particle’s position and momentum simultaneously with arbitrary precision. While there is little disagreement about the fact that mathematics manages to straddle the worlds of both thought and material, uniquely and successfully, little progress has been made in explaining why. I often consider that Plato’s view of mathematics has something to say about this.

What impresses me at the moment is that Gödel’s Platonist view and the implications of his Incompleteness Theorems are important to an epistemological discussion of mathematics. Gregory Chaitin shares Gödel’s optimistic view of incompleteness, recognizing it as a confirmation of mathematics’ infinite creativity. And in Chaitin’s work on metabiology, described in his book Proving Darwin, mathematical creativity parallels biological creativity – the persistent creative action of evolution. In an introduction to a course on metabiology, Chaitin writes the following:

in my opinion the ultimate historical perspective on the significance of incompleteness may be that

Gödel opens the door from mathematics to biology.

Gödel’s work concerned formal systems – abstract, symbolic organizations of terms and the relationships among them. Such a system is complete if, for everything that can be stated in the language of the system, either the statement or its negation can be proved within the system. In 1931, Gödel established that, in mathematics, this is not possible. What he proved is that given any consistent axiomatic theory, developed enough to enable the proof of arithmetic propositions, it is possible to construct a proposition that can be neither proved nor disproved by the given axioms. He proved further that no such system can prove its own consistency (i.e. that it is free of contradiction). For Gödel, this strongly supports the idea that the mathematics thus far understood explores a mathematical reality that exceeds what we know of it. Again from Goldstein:

Gödel was able to twist the intelligence-mortifying material of paradox into a proof that leads us to deep insights into the nature of truth, and knowledge, and certainty. According to Gödel’s own Platonist understanding of his proof, it shows us that our minds, in knowing mathematics, are escaping the limitations of man-made systems, grasping the independent truths of abstract reality.

In 1936, Alan Turing replaced Gödel’s arithmetic-based formal system with carefully described hypothetical devices that could perform any mathematical computation expressible as an algorithm. Turing then proved that some problems cannot be effectively computed by such a device (now known as a ‘Turing machine’). In particular, no Turing machine program can determine, in finite time, whether an arbitrary program given an arbitrary input will eventually halt and produce an output (the halting problem).
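The heart of Turing’s proof is a diagonal trick, which can be sketched in Python with functions standing in for Turing machine programs. This is a simplification (Python functions are not Turing machines), and `make_contrary` and `pessimist` are hypothetical names. Given any claimed halting decider, we build a program that does the opposite of whatever the decider predicts about that very program.

```python
def make_contrary(halts):
    """Given any claimed decider halts(program, argument) -> bool,
    build the 'contrary' program from the diagonal argument."""
    def contrary(program):
        if halts(program, program):
            while True:      # predicted to halt, so loop forever instead
                pass
        return "halted"      # predicted to loop, so halt immediately
    return contrary

# A (necessarily flawed) candidate decider that always predicts "never halts".
def pessimist(program, argument):
    return False

contrary = make_contrary(pessimist)
print(pessimist(contrary, contrary))  # the decider predicts a loop
print(contrary(contrary))             # but contrary halts, so the prediction fails
```

A decider that answered True for `contrary` would fare no better: `contrary` would then loop forever, which is why that case is described here rather than run. Whatever the decider says about `contrary`, it is wrong.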

The paper that Castelvecchi discusses is one which brings Gödel’s theorems to quantum mechanics:

In 1931, Austrian-born mathematician Kurt Gödel shook the academic world when he announced that some statements are ‘undecidable’, meaning that it is impossible to prove them either true or false. Three researchers have now found that the same principle makes it impossible to calculate an important property of a material — the gaps between the lowest energy levels of its electrons — from an idealized model of its atoms.

Here, our ability to comprehend a quantum state of affairs and our ability to observe are tightly knit.

Again from Castelvecchi:

Since the 1990s, theoretical physicists have tried to embody Turing’s work in idealized models of physical phenomena. But “the undecidable questions that they spawned did not directly correspond to concrete problems that physicists are interested in”, says Markus Müller, a theoretical physicist at Western University in London, Canada, who published one such model with Gogolin and another collaborator in 2012.

The work described in the article concerns what’s called the spectral gap: the gap between the lowest energy level that electrons can occupy and the next one up. The presence or absence of this gap determines some of a material’s properties. The paper’s first author, Toby S. Cubitt, is a quantum information theorist, and the paper is a direct application of Turing’s work: the authors construct a model material in which whether a spectral gap exists depends on the outcome of a halting problem, that is, on whether a Turing machine encoded in the model ever halts.
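For a small, finite system the spectral gap can simply be computed. As an illustration only, here is a NumPy sketch using a transverse-field Ising chain, a textbook model chosen because it is easy to write down; it is not the model Cubitt and his coauthors constructed, and the function names and parameter values are hypothetical. The undecidability result concerns the limit of an infinitely large lattice, which no finite calculation like this one can settle.

```python
from functools import reduce

import numpy as np

# Pauli matrices and the 2x2 identity
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])
I = np.eye(2)

def site_operator(op, site, n):
    """Embed a single-spin operator at position `site` in an n-spin chain."""
    factors = [op if k == site else I for k in range(n)]
    return reduce(np.kron, factors)

def ising_hamiltonian(n, J=1.0, h=0.5):
    """Open transverse-field Ising chain: H = -J sum Z_i Z_(i+1) - h sum X_i."""
    dim = 2 ** n
    H = np.zeros((dim, dim))
    for i in range(n - 1):
        H -= J * site_operator(Z, i, n) @ site_operator(Z, i + 1, n)
    for i in range(n):
        H -= h * site_operator(X, i, n)
    return H

def spectral_gap(H):
    """Difference between the two lowest eigenvalues of a symmetric matrix."""
    energies = np.linalg.eigvalsh(H)  # eigenvalues in ascending order
    return energies[1] - energies[0]

for n in (2, 4, 6):
    print(n, round(spectral_gap(ising_hamiltonian(n)), 4))
```

Each calculation here is exact for its finite chain, but the question the paper addresses is what happens as the lattice grows without bound.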

What I find striking is that the objectivity issues raised by the empiricists and positivists of the 1920s are not the same as the objectivity issues raised by quantum mechanics. But the notion of undecidability must be deep indeed. It persists and continues to be relevant.

By Joselle, on November 30th, 2015

I’m not sure what led me to David Mumford’s Why I am a Platonist, which appeared in a 2008 issue of the European Mathematical Society (EMS) Newsletter, but I’m happy I found it. David Mumford is currently Professor Emeritus at Brown and Harvard Universities. The EMS piece is a clear and straightforward exposition of Mumford’s Platonism, which he defines in this way:

The belief that there is a body of mathematical objects, relations and facts about them that is independent of and unaffected by human endeavors to discover them.

Mumford steers clear of the impulse to place these objects, relations, and facts somewhere, like outside time and space. He relies, instead, on the observation that the history of mathematics seems to tell us that mathematics is “universal and unchanging, invariant across time and space.” He illustrates this with some examples, and uses the opportunity to also argue for a more multicultural perspective on the history of mathematics than is generally taught.

But Mumford’s piece is more than a contribution to what many now think is an irrelevant debate about whether mathematics is created or discovered. As the essay unwinds, he begins to talk about the kind of thing that, in many ways, steers the direction of my blog.

So if we believe that mathematical truth is universal and independent of culture, shouldn’t we ask whether this is uniquely the property of mathematical truth or whether it is true of more general aspects of cognition? In fact, “Platonism” comes from Plato’s Republic, Book VII and there you find that he proposes “an intellectual world”, a “world of knowledge” where all things pertaining to reason and truth and beauty and justice are to be found in their full glory (cf. http://classics.mit.edu/Plato/republic.8.vii.html).

Mumford briefly discusses the Platonic view of ethical principles and of language, making the observation that all human languages can be translated into each other (“with only occasional difficulties”). This, he argues, suggests a common conceptual substrate. He notes that people have used graphs of one kind or another to organize concepts and proceeds to argue that studies in cognition have extended this same idea into things like semantic nets or Bayesian networks, with the understanding that knowledge is the structure given to the world of concepts. Mathematics, then, is characterized as pure structure, the way we hold it all together.

And here’s the key to this discussion for me. Mumford proposes that the Platonic view can be understood by looking at the body.

Brian Davies argues that we should study fMRI’s of our brains when we think about 5, about Gregory’s formula or about Archimedes’ proof and that these scans will provide a scientific test of Platonism. But the startling thing about the cortex of the human brain is how uniform its structure is and how it does not seem to have changed in any fundamental way within the whole class of mammals. This suggests that mental skills are all developments of much simpler skills possessed, e.g. by mice. What is this basic skill? I would suggest that it is the ability to convert the analog world of continuous valued signals into a discrete representation using concepts and to allow these activated concepts to interact via their graphical links. The ability of humans to think about math is one result of the huge expansion of memory in homo sapiens, which allows huge graphs of concepts and their relations to be stored and activated and understood at one and the same time in our brains. (emphasis added)

Mumford ends this piece with what is likely the beginning of another discussion:

How do I personally make peace with what Hersh calls “the fatal flaw” of dualism? I like to describe this as there being two orthogonal sides of reality. One is blood flow, neural spike trains, etc.; the other is the word ‘loyal’, the number 5, etc. But I think the latter is just as real, is not just an epiphenomenon and that mathematics provides its anchor. (emphasis added)

David Mumford now studies the mathematics of vision. He has a blog where he discusses his work on vision, his earlier work in Algebraic Geometry, and other things. In his introductory comments to the section on vision he says the following:

What is “vision”? It is not usually considered as a standard field of applied mathematics but in the last few decades it has assumed an identity of its own as a multi-disciplinary area drawing in engineers, computer scientists, statisticians, psychologists and biologists as well as mathematicians. For me, its importance is that it is a point of entry into the larger problem of the scientific modeling of thought and the brain. Vision is a cognitive skill that, on the one hand, is mastered by many lower animals while, on the other hand, has proved very hard to duplicate on a computer. This level of difficulty makes it an ideal test bed for theorizing on the subtler talents manifested by humans.

The section on vision provides a narrative that describes some of the hows and whys for particular mathematical efforts that are being used. And each one of these disciplines has its own section with links to references.

A 2011 post of mine was inspired, in part, by the idea that what Plato was actually saying is consistent with even the most brain-based thoughts on how we come to know anything. I took note of a few statements from what has been called the simile of the sun.

And the power which the eye possesses is a sort of effluence which is dispensed from the sun

Then the sun is not sight, but the author of sight who is recognized by sight

And the soul is like the eye: when resting upon that on which truth and being shine, the soul perceives and understands, and is radiant with intelligence; but when turned toward the twilight of becoming and perishing, then she has opinion only, and goes blinking about, and is first of one opinion and then of another, and seems to have no intelligence.

By Joselle, on November 15th, 2015 Looking through some blog sites that I once frequented (but have recently neglected) I saw that John Horgan’s Cross Check had a piece on George Johnson’s book Fire in the Mind: Science, Faith, and the Search for Order. This quickly caught my attention because Horgan and Johnson figured prominently in my mind in the late 90’s. In the first paragraph Horgan writes:

Fire alarmed me, because it challenged a fundamental premise of The End of Science, which I was just finishing.

In the mid-nineties, I knew that Horgan was a staff writer for Scientific American and I had kept one of his pieces on quantum physics in my file of interesting new ideas. When I heard about The End of Science I got a copy and very much enjoyed it. I had begun writing, and was trying to create a new beginning for myself. This included my decision to leave New York (where I had lived my whole life) and Manhattan in particular, where I had lived for about seventeen years. In the end, it was Johnson’s book that gave my move direction. I wouldn’t just move to a place that was warmer, prettier, and easier. I decided to move to Santa Fe, New Mexico.

In his original review of Fire in the Mind, Horgan produced a perfect summary of the reasons I chose Santa Fe. He reproduced this review on his blog in response to the release of a new edition:

In New Mexico, the mountains’ naked strata compel historical, even geological, perspectives. The human culture, too, is stratified. Scattered in villages throughout the region are Native Americans such as the Tewa, whose creation myths rival those of modern cosmology in their intricacy. Exotic forms of Christianity thrive among both the Indians and the descendants of the Spaniards who settled here several centuries ago. In the town of Truchas, a sect called the Hermanos Penitentes seeks to atone for humanity’s sins by staging mock crucifixions and practicing flagellation.

Lying lightly atop these ancient belief systems is the austere but dazzling lamina of science. Slightly more than half a century ago, physicists at the Los Alamos National Laboratory demonstrated the staggering power of their esoteric formulas by detonating the first atomic bomb. Thirty miles to the south, the Santa Fe Institute was founded in 1985 and now serves as the headquarters of the burgeoning study of complex systems. At both facilities, some of the world’s most talented investigators are seeking to extend or transcend current explanations about the structure and history of the cosmos.

Santa Fe, it seemed, would not only be a nice place to live, it would be a good place to think. But I should stop reminiscing and get to the point, which has to do with Johnson’s book and a few related topics that Horgan pointed to in his suggestions for further reading. Before I look at those suggestions, let’s see why they were there.

Horgan characterizes Johnson’s book as “one that raises unsettling questions about science’s claims to truth.” Johnson puts forward a simple description of the view that characterizes Fire in the Mind in the Preface to the new edition.

Our brains evolved to seek order in the world. And when we can’t find it, we invent it. Pueblo mythology cannot compete with astrophysics and molecular biology in attempting to explain the origins of our astonishing existence. But there is not always such a crisp divide between the systems we discover and those we imagine to be true.

Horgan credits Johnson with providing, “an up-to-the-minute survey of the most exciting and philosophically resonant fields of modern research,” and goes on to say, “This achievement alone would make his book worth reading. His accounts of particle physics, cosmology, chaos, complexity, evolutionary biology and related developments are both lyrical and lucid.” But the issues raised, and batted about a bit by Horgan, have to do with what one understands science to be, and what one could mean by truth. Johnson argues that there is a fundamental relationship between the character of pre-scientific myths and scientific theories. For Horgan, this brought Thomas Kuhn to mind and hence a reference to one of his posts from 2012, What Thomas Kuhn Really Thought about Scientific “Truth.”

While pre-scientific stories about the world are usually definitively distinguished from the scientific view, the impulse to explore them does occur in the scientific community. I, for one, was impressed some years ago when I saw that the sequence of events in the creation story I learned from Genesis somewhat paralleled scientific ideas (light appeared, then light was separated from darkness, sky from water, water from land, then creatures appeared in the water and sky followed by creatures on the land). The effectiveness of scientific theories, however, is generally accepted to be the consequence of the theories being correct. Yet one of the things that inspires books like Johnson’s is that science hasn’t actually diminished the mystery of our existence and our world. The stubborn strangeness of quantum-mechanical physics, the addition of dark matter and dark energy to the cosmos, the surprises in complexity theories, the difficulties understanding consciousness, all of these things stir up questions about the limits of science or even what it means to know anything.

Horgan also refers to the use of information theory to solve some of physics’ mysteries, where information is treated as the fundamental substance of the universe. He links to a piece where he argues that this can’t be true. But I believe Horgan is not seeing the reach of the information thesis. According to some theorists, like David Deutsch, information is always ‘instantiated,’ always physical, but always undergoing transformation. It has, however, some substrate independence. Information as such includes the coding in DNA, the properties within quantum mechanical systems, as well as our conceptual systems. On another level, consciousness is described by Giulio Tononi’s model as integrated information.

The persistence of mystery doesn’t cause me to wonder about whether scientific ideas are true or not. It leads me to ask more fundamental questions like – What is science? How did it happen? Why or how was it perceived that mathematics was the key? I believe that these are the questions lying just beneath Johnson’s narrative.

The development of scientific thinking is an evolution that is likely part of some larger evolution. It is real, it has meaning and it has consequences. I wouldn’t ask if it’s true. It is what we see when we hone particular skills of perception. Mathematics is how we do it. Like the senses, mathematics builds structure from data, even when those structures are completely beyond reach. When mathematicians explore mathematics directly, they probe this structure-building apparatus itself.

I can’t help but interject here something from biologist Humberto Maturana, from a paper published in Cybernetics and Human Knowing, where he comments, “…reality is an explanatory notion invented to explain the experience of cognition.”

Relevant here is something else I found as I looked through Scientific American blog posts. An article by Paul Dirac from the May 1963 issue of Scientific American was reproduced in a 2010 post. It begins:

In this article I should like to discuss the development of general physical theory: how it developed in the past and how one may expect it to develop in the future. One can look on this continual development as a process of evolution, a process that has been going on for several centuries.

In the course of talking about quantum theory, Dirac describes Schrodinger’s early work on his famous equation.

Schrodinger worked from a more mathematical point of view, trying to find a beautiful theory for describing atomic events, and was helped by De Broglie’s ideas of waves associated with particles. He was able to extend De Broglie’s ideas and to get a very beautiful equation, known as Schrodinger’s wave equation, for describing atomic processes. Schrodinger got this equation by pure thought, looking for some beautiful generalization of De Broglie’s ideas, and not by keeping close to the experimental development of the subject in the way Heisenberg did.

Johnson ends his new Preface nicely:

As I write this, I can see out my window to the piñon-covered foothills where the Santa Fe Institute continues to explore the science of complex systems—those in which many small parts interact with one another, giving rise to a rich, new level of behavior. The players might be cells in an organism or creatures in an ecosystem. They might be people bartering and selling and unwittingly generating the meteorological gyrations of the economy. They might be the neurons inside the head of every one of us— collectively, and still mysteriously, giving rise to human consciousness and its beautiful obsession to find an anchor in the cosmic swirl.

By Joselle, on October 30th, 2015 I think often about the continuity of things – about the smooth progression of structure, that is the stuff of life, from the microscopic to the macrocosmic. I was reminded, again, of how often I see things in terms of continuums when I listened online to a lecture given by Gregory Chaitin in 2008. In that lecture (from which he has also produced a paper) Chaitin defends the validity and productivity of an experimental mathematics, one that uses the kind of reasoning with which a theoretical physicist would be comfortable. And here he argues:

Absolute truth you can only approach asymptotically in the limit from below.

For some time now, I have considered this asymptotic approach to truth in a very broad sense, where truth is just all that is. In fact, I tend to understand most things in terms of a continuum of one kind or another. And I have found that research efforts across disciplines increasingly support this view. It is consistent, for example, with the ideas expressed in David Deutsch’s The Beginning of Infinity, where knowledge is equated with information, whether physical (like quantum systems), biological (like DNA) or explanatory (like theory). From this angle, the non-explanatory nature of biological knowledge, like the characteristics encoded in DNA, is distinguished only by its limits. Deutsch’s newest project, which he calls constructor theory, relies on the idea that information is fundamental to everything. Constructor theory is meant to get at what Deutsch calls the “substrate independence of information.” It defines a more fundamental level of physics than particles, waves and space-time. And Deutsch expects that this ‘more fundamental level’ will be shared by all physical systems.

In constructor theory, it is information that undergoes consistent transformation – from the attribute of a creature determined by the arrangement of a set of nucleic acids, to the symbolic representation of words on a page that begin as electrochemical signals in my brain, to the information transferred in quantum mechanical events. Everything becomes an instance on a continuum of possibilities.

I would argue that another kind of continuum can be drawn from Samir Zeki’s work on the visual brain. Zeki’s investigation of the neural components of vision has led to the study of what he calls neuroesthetics, which re-associates creativity with the body’s quest for knowledge. While neuroesthetics begins with a study of the neural basis of visual art, it inevitably touches on epistemological questions. The institute that organizes this work lists as its first aim:

-to further the study of the creative process as a manifestation of the functions and functioning of the brain. (emphasis added)

The move to associate the execution and appreciation of visual art with the brain is a move to re-associate the body with the complexities of conscious experience. Zeki outlines some of the motivation in a statement on the neuroesthetics website. He sees art as an inquiry through which the artist investigates the nature of visual experience.

It is for this reason that the artist is in a sense, a neuroscientist, exploring the potentials and capacities of the brain, though with different tools.

Vision is understood as a tool for the acquisition of knowledge. (emphasis added)

The characteristic of an efficient knowledge-acquiring system, faced with permanent change, is its capacity to abstract, to emphasize the general at the expense of the particular. Abstraction, which arguably is a characteristic of every one of the many different visual areas of the brain, frees the brain from enslavement to the particular and from the imperfections of the memory system. This remarkable capacity is reflected in art, for all art is abstraction.

If knowledge is understood in Deutsch’s terms, then all of life is the acquisition of knowledge, and the production of art is a biological event. But this use of the abstract, to free the brain of the particular, is present in literature as well, and is certainly operating in mathematics. One can imagine a continuum from retinal images, to our inquiry into retinal images, to visual art and mathematics and the productive entwining of science and mathematics.

Another Chaitin paper comes to mind here – Conceptual Complexity and Algorithmic Information. This paper focuses on the complexity that lies ‘between’ the complexities of the tiny worlds of particle physics and the vast expanses of cosmology, namely the complexity of ideas. The paper proposes a mathematical approach to philosophical questions by defining the conceptual complexity of an object X

to be the size in bits of the most compact program for calculating X, presupposing that we have picked as our complexity standard a particular fixed, maximally compact, concise universal programming language U.
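Chaitin’s measure cannot be computed in general, but a compressor gives a rough, computable upper bound on how compactly something can be described. The sketch below swaps in Python’s `zlib` as that stand-in (an assumption of this illustration, not Chaitin’s definition, which refers to the shortest program in a fixed universal language U) to compare a highly patterned string with a random-looking one.

```python
import os
import zlib

def compressed_size_bits(data: bytes) -> int:
    """Size in bits of a zlib-compressed encoding of `data`. This is only a
    crude upper-bound proxy, not program-size complexity itself."""
    return 8 * len(zlib.compress(data, 9))

patterned = b"01" * 5000         # highly regular: a tiny program could print it
random_like = os.urandom(10000)  # no evident pattern for the compressor to exploit

for label, data in [("patterned", patterned), ("random-like", random_like)]:
    print(label, "raw bits:", 8 * len(data), "compressed bits:", compressed_size_bits(data))
```

The patterned string shrinks dramatically while the random-looking one does not, which echoes the intuition behind the definition: an object is simple when a short description suffices to produce it.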

Chaitin then uses this definition to explore the conceptual complexity of physical, mathematical, and biological theories. Particularly relevant to this discussion is his idea that the brain could be a two-level system. In other words, the brain may not only be working at the neuronal level, but also at the molecular level. The “conscious, rational, serial, sensual front-end mind” is fast and the action on this front is in the neurons. The “unconscious, intuitive, parallel, combinatorial back-end mind,” however, is molecular (where there is much greater computing and memory capacity). If this model were correct, it would certainly break down our compartmental view of the body (and the body’s experience). And it would level the playing field, revealing an equivalence among all of the body’s actions that might redirect some of the questions we ask about ourselves and our world.

By Joselle, on September 30th, 2015  My last post focused on the kinds of problems that can develop when abstract objects, created within mathematics, increase in complexity – like the difficulty of wrapping our heads around them, or of managing them without error. I thought it would be interesting to turn back around and take a look at how the seeds of an idea can vary.

I became aware only recently that a fairly modern mathematical idea could be observed in the social organization of African communities. Ron Eglash, Professor at the Rensselaer Polytechnic Institute, has a multifaceted interest in the intersections of culture, mathematics, science, and technology. Sometime in the 1980s, Eglash noticed that aerial views of African villages showed fractal patterns, and he followed this up with visits to the villages to investigate them.

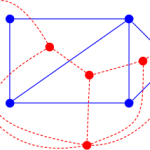

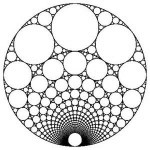

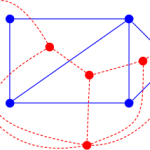

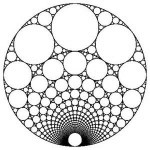

In a 2007 TED talk, Eglash describes the content of the fractal patterns displayed by the villages. One of these villages, located in southern Zambia, is made up of a circular pattern of self-similar rings like the rings shown above. The whole village is a ring; on that ring are the rings of individual families, and within each of those rings are the heads of families. In addition to the repeated rings that shape the village and the families, there is a repeated sacred altar spot. A sacred altar is placed in the same spot in each individual home. In each family ring, the home of the head of the family is found in the sacred altar spot. In the ring of all families (the whole village), the Chief’s ring is in the place of the sacred altar and, within the Chief’s ring, the ring for the Chief’s immediate family is in the place of the sacred altar. Within the home of the Chief’s immediate family, ‘a tiny village’ is in the place of the sacred altar, and within this tiny village live the ancestors. It’s a wonderful picture of an infinitely extending self-similar pattern.
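The chain of altar spots can be sketched as a simple loop in which each level is a smaller copy placed inside the one that contains it. This is a hypothetical geometric model, not Eglash’s: the scale factor (0.3), the altar direction (π/2, straight “up”), and the `altar_chain` helper are all illustrative assumptions, and the sketch follows only the altar-spot nesting, not the family rings arranged around each circle.

```python
import math
from dataclasses import dataclass


@dataclass
class Ring:
    label: str
    x: float       # center x-coordinate
    y: float       # center y-coordinate
    radius: float


def altar_chain(labels, radius=1.0, scale=0.3, altar_angle=math.pi / 2):
    """Nest each ring at the 'altar' spot of the ring before it.

    Every ring is a copy of its parent scaled by `scale`, shifted toward
    `altar_angle` so that it touches the parent's boundary from the inside.
    (Hypothetical parameters chosen for illustration only.)
    """
    rings = []
    x, y = 0.0, 0.0
    for label in labels:
        rings.append(Ring(label, x, y, radius))
        child_radius = radius * scale
        # Move the center so the child ring is internally tangent at the altar.
        offset = radius - child_radius
        x += offset * math.cos(altar_angle)
        y += offset * math.sin(altar_angle)
        radius = child_radius
    return rings


levels = ["whole village", "Chief's ring", "Chief's immediate family",
          "tiny village of the ancestors"]
for ring in altar_chain(levels):
    print(f"{ring.label:32s} center=({ring.x:.3f}, {ring.y:.3f}) r={ring.radius:.4f}")
```

Adding more labels simply continues the same rule one level deeper, which is the point of the village design: there is no natural place for the pattern to stop.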

Eglash is clear that these kinds of scaling patterns are not universal to all indigenous architectures, and also that the diversity of African cultures is fully expressed within the fractal technology:

…a widely shared design practice doesn’t necessarily give you a unity of culture — and it definitely is not “in the DNA.”…the fractals have self-similarity — so they’re similar to themselves, but they’re not necessarily similar to each other — you see very different uses for fractals. It’s a shared technology in Africa.

Certainly it is interesting that before the notion of a fractal in mathematics was formalized, purposeful fractal designs were being used by communities in Africa to organize themselves. But what I find even more provocative is that everything in the life of the village is subject to the scaling. Social, mystical, and spatial (geometric) ideas are made to correspond. This says something about the character of the mechanism being used (the fractals), as well as the culture that developed its use.

Eglash also gave a brief review of some early mathematical ideas about recursive self-similarity, paying particular attention to the Cantor set and the Koch curve. He observed that Cantor did see a correspondence between the infinities of mathematics and God’s infinite nature. But in these recursively produced village designs, that correspondence is embodied in the stuff of everyday life. It is as if the ability to represent recursive self-similarity and the facts of life itself are experienced together. The recursive nature of these village designs didn’t happen by accident. It was clearly understood. As Eglash says in his talk,

…they’re mapping the social scaling onto the geometric scaling; it’s a conscious pattern. It is not unconscious like a termite mound fractal.
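Like the village layout, the Cantor set that Eglash reviews is produced by repeating one rule at every scale. A minimal sketch of its construction follows, assuming the standard middle-thirds version on the interval [0, 1]. The `cantor_intervals` helper is a hypothetical name, and Python’s `Fraction` is used only to keep the arithmetic exact so the self-similarity can be checked directly.

```python
from fractions import Fraction


def cantor_intervals(depth):
    """Closed intervals remaining after `depth` steps of removing
    the open middle third of every interval, starting from [0, 1]."""
    intervals = [(Fraction(0), Fraction(1))]
    for _ in range(depth):
        next_intervals = []
        for a, b in intervals:
            third = (b - a) / 3
            next_intervals.append((a, a + third))   # keep the left third
            next_intervals.append((b - third, b))   # keep the right third
        intervals = next_intervals
    return intervals


for n in range(3):
    print(n, [(str(a), str(b)) for a, b in cantor_intervals(n)])
```

At every stage, the intervals in the left third are exactly a one-third-scale copy of the entire previous stage, and the total remaining length is (2/3)^n, shrinking toward zero while the number of pieces grows without bound.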

Given that these patterns developed outside mathematics proper, and predate mathematics’ formal representation of fractals, they inevitably raise questions about what mathematics is, and this is exactly the kind of question on which ethnomathematics focuses. Eglash is an ethnomathematician. Even a brief look at some of the literature in ethnomathematics reveals a fairly broad range of interests, many of which are oriented toward more successful mathematics education, and many of which are strongly criticized. But it seems to me that the meaning and significance of ethnomathematics have not been made precise. In a 2006 paper, Eglash makes an interesting observation: he suggests that the “reticence to consider indigenous mathematical knowledge” may be related to the “Platonic realism of the mathematics subculture.”

For mathematicians in the Euro-American tradition, truth is embedded in an abstract realm, and these transcendental objects are inaccessible outside of a particular symbolic analysis.

Clearly there will be political questions (related to education issues) tied up in this kind of discussion about what and where mathematics is. But, with respect to these African villages, I most enjoyed seeing a mathematical idea become the vehicle with which to explore and represent infinities.
